OpenShift 4: Splunk HEC Integration


Muhammad Aizuddin Zali


- Cloud, OpenShift, HEC

- March 4, 2020

NOTE: This article focuses on technical feasibility and features. Production usage requires a more detailed implementation discussion.

Objective.

We want to ship container logs, API server audit logs, Kubernetes objects and Kubernetes metrics (not OpenShift monitoring metrics) to an external Splunk instance via HEC (HTTP Event Collector).

Prereq.

- Helm 3 (latest release; Helm 3 removes Tiller and its security issues)

- Splunk (we use the free Splunk download with Splunk's internal self-signed certificate)

Steps.

NOTE: Ensure you are logged in to the cluster as cluster-admin via the oc client before running the steps below.

- Download [the free Splunk](https://www.splunk.com/en_us/download/splunk-enterprise.html?utm_campaign=google_apac_south_en_search_brand&utm_source=google&utm_medium=cpc&utm_content=Splunk_Enterprise_Demo&utm_term=splunk&_bk=splunk&_bt=364789567862&_bm=p&_bn=g&_bg=75654886508&device=c&gclid=EAIaIQobChMIt7qLx8aA6AIVwRuPCh39eggzEAAYASADEgJOZ_D_BwE); we are going to install it.

- With the RPM downloaded, run the installation:

    #> ls -rlt splunk*
    -rw-r--r--. 1 root root 510124954 Feb 13 00:48 splunk-8.0.2-a7f645ddaf91-linux-2.6-x86_64.rpm
    #> rpm -ivh splunk-8.0.2-a7f645ddaf91-linux-2.6-x86_64.rpm
    warning: splunk-8.0.2-a7f645ddaf91-linux-2.6-x86_64.rpm: Header V4 RSA/SHA256 Signature, key ID b3cd4420: NOKEY
    Verifying... ################################# [100%]
    Preparing... ################################# [100%]
    Updating / installing...
    1:splunk-8.0.2-a7f645ddaf91 ################################# [100%]
    complete

- Start the Splunk server:

    #> cd /opt/splunk/bin
    #> ./splunk start --accept-license
    This appears to be your first time running this version of Splunk.
    Splunk software must create an administrator account during startup. Otherwise, you cannot log in.
    Create credentials for the administrator account.
    Characters do not appear on the screen when you type in credentials.
    Please enter an administrator username: admin
    Password must contain at least:
    * 8 total printable ASCII character(s).
    Please enter a new password:
    Please confirm new password:
    Copying '/opt/splunk/etc/openldap/ldap.conf.default' to '/opt/splunk/etc/openldap/ldap.conf'.
    Generating RSA private key, 2048 bit long modulus
    ........................................................................+++++
    .....................................................................+++++
    e is 65537 (0x10001)
    writing RSA key
    Generating RSA private key, 2048 bit long modulus
    .+++++
    ..+++++
    e is 65537 (0x10001)
    writing RSA key
    Moving '/opt/splunk/share/splunk/search_mrsparkle/modules.new' to '/opt/splunk/share/splunk/search_mrsparkle/modules'.
    Splunk> Another one.
    Checking prerequisites...
    Checking http port [8000]: open
    Checking mgmt port [8089]: open
    Checking appserver port [127.0.0.1:8065]: open
    Checking kvstore port [8191]: open
    Checking configuration... Done.
    Creating: /opt/splunk/var/lib/splunk
    Creating: /opt/splunk/var/run/splunk
    Creating: /opt/splunk/var/run/splunk/appserver/i18n
    Creating: /opt/splunk/var/run/splunk/appserver/modules/static/css
    Creating: /opt/splunk/var/run/splunk/upload
    Creating: /opt/splunk/var/run/splunk/search_telemetry
    Creating: /opt/splunk/var/spool/splunk
    Creating: /opt/splunk/var/spool/dirmoncache
    Creating: /opt/splunk/var/lib/splunk/authDb
    Creating: /opt/splunk/var/lib/splunk/hashDb
    New certs have been generated in '/opt/splunk/etc/auth'.
    Checking critical directories... Done
    Checking indexes...
    Validated: _audit _internal _introspection _metrics _telemetry _thefishbucket history main summary
    Done
    Checking filesystem compatibility... Done
    Checking conf files for problems...
    Done
    Checking default conf files for edits...
    Validating installed files against hashes from '/opt/splunk/splunk-8.0.2-a7f645ddaf91-linux-2.6-x86_64-manifest'
    All installed files intact.
    Done
    All preliminary checks passed.
    Starting splunk server daemon (splunkd)...
    Generating a RSA private key
    ....+++++
    ..+++++
    writing new private key to 'privKeySecure.pem'
    -----
    Signature ok
    subject=/CN=bastion.local.bytewise.my/O=SplunkUser
    Getting CA Private Key
    writing RSA key
    Done
    [ OK ]
    Waiting for web server at # to be available.... Done
    If you get stuck, we're here to help.
    Look for answers here: http://docs.splunk.com
    The Splunk web interface is at #

Do not forget to open firewall rules for 8000/tcp, 8089/tcp, 8191/tcp and 8088/tcp, or any other custom port you configured.

- Install Helm, as Splunk Connect for Kubernetes depends on Helm to deploy the HEC stack on OpenShift.
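Once the firewall rules are in place, it can help to confirm that the Splunk ports are reachable from a host on the cluster network before deploying anything. The sketch below is a plain TCP connect test using Python's standard `socket` module. The port-to-name mapping mirrors the default ports listed above; treat it as an assumption if you changed any of them. The helper names are hypothetical. A successful result only proves that a TCP handshake works, not that HEC accepts your token.

```python
import socket

# Default Splunk ports from the firewall note: Splunk Web, management/REST,
# KV store and HEC. Change these if you customised any of them.
SPLUNK_PORTS = {8000: "web", 8089: "mgmt", 8191: "kvstore", 8088: "hec"}


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP handshake with host:port succeeds within `timeout`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts and DNS failures all land here.
        return False


def check_splunk_ports(host: str, ports: dict = SPLUNK_PORTS) -> dict:
    """Map each port's name to whether it is reachable from this machine."""
    return {name: port_open(host, port) for port, name in ports.items()}
```

For example, `check_splunk_ports("bastion.local.bytewise.my")` returns a dict mapping "web", "mgmt", "kvstore" and "hec" to True or False. Run it from a node or pod network that will later talk to Splunk; a check run on the Splunk host itself says nothing about the firewall.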

- Next, create a project for Splunk HEC called “splunk-connect”.

    #> oc new-project splunk-connect
    #> oc project splunk-connect

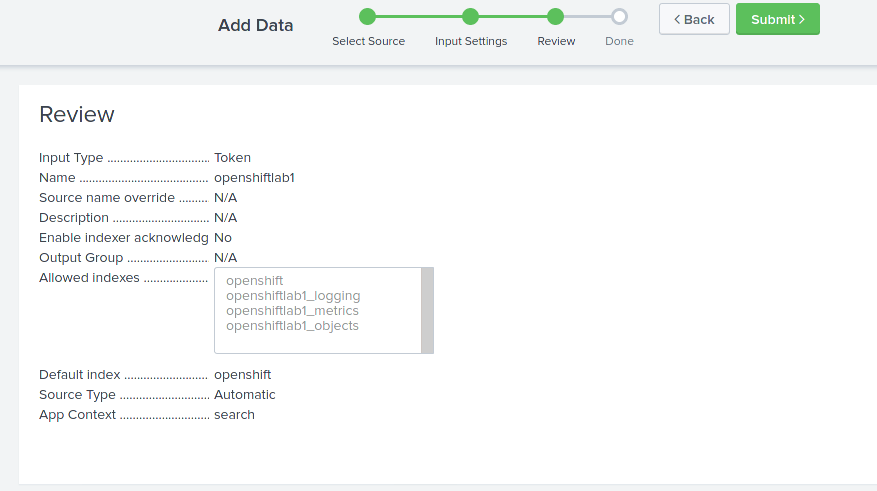

- In Splunk, create the following indexes via Settings > Indexes > New Index (index type in parentheses):

- openshift (events)

- openshiftlab1_logging (events)

- openshiftlab1_metrics (metrics)

- openshiftlab1_objects (events)
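If you prefer scripting to clicking through Settings > Indexes, Splunk's management REST API on port 8089 has a `services/data/indexes` endpoint. It accepts `name` and `datatype` (`event` or `metric`) form fields. The following is a minimal sketch under a few assumptions. It uses basic authentication with the admin account created earlier. It also accepts Splunk's self-signed certificate through an opt-in unverified TLS context, which is suitable for a lab only. The objects index is spelled `openshiftlab1_objects`, matching the name the Helm values file below references. The helper names are hypothetical.

```python
import base64
import ssl
import urllib.parse
import urllib.request

# Indexes from the list above, mapped to the REST "datatype" value.
INDEXES = {
    "openshift": "event",
    "openshiftlab1_logging": "event",
    "openshiftlab1_metrics": "metric",
    "openshiftlab1_objects": "event",
}


def create_index_request(mgmt_url: str, user: str, password: str,
                         name: str, datatype: str = "event") -> urllib.request.Request:
    """Build a POST to <mgmt_url>/services/data/indexes that creates one index."""
    body = urllib.parse.urlencode({"name": name, "datatype": datatype}).encode()
    req = urllib.request.Request(f"{mgmt_url}/services/data/indexes",
                                 data=body, method="POST")
    credentials = base64.b64encode(f"{user}:{password}".encode()).decode()
    req.add_header("Authorization", f"Basic {credentials}")
    req.add_header("Content-Type", "application/x-www-form-urlencoded")
    return req


def send(req: urllib.request.Request, verify_tls: bool = False) -> int:
    """Send the request and return the HTTP status (lab default: skip TLS checks)."""
    ctx = ssl.create_default_context()
    if not verify_tls:
        # Splunk's self-signed management certificate will not verify.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(req, context=ctx, timeout=10) as resp:
        return resp.status
```

To create all four indexes, loop over the mapping. Call `send(create_index_request("https://bastion.local.bytewise.my:8089", "admin", "<password>", name, datatype))` for each `name, datatype in INDEXES.items()`. If an index already exists, Splunk answers with an error status, which `urlopen` raises as `urllib.error.HTTPError`.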

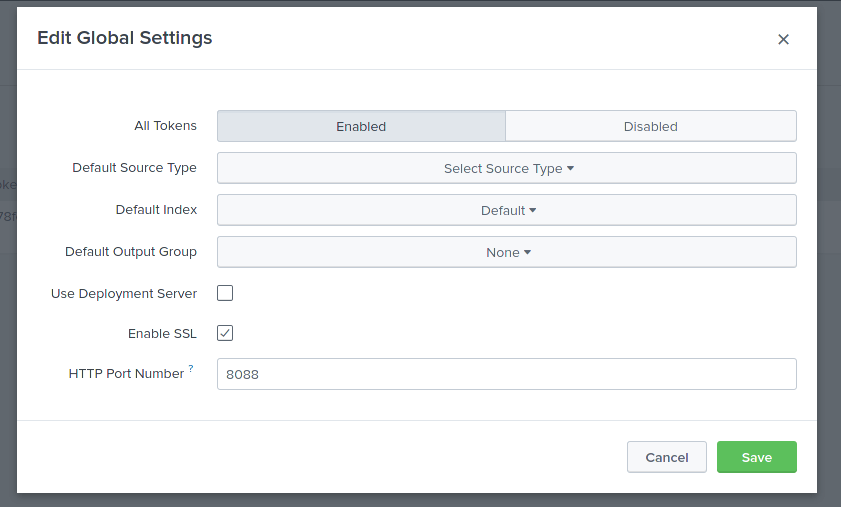

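- Enable HEC (HTTP Event Collector) via Settings > Data Inputs > HTTP Event Collector > Global Settings: set All Tokens to Enabled, set Default Index to “Default”, and Save. Then create a HEC token (New Token) that is allowed to write to the indexes above; its value goes into every "token" field of the Helm values file.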
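Before wiring up the cluster, you can confirm that the token works by posting a single test event to HEC's JSON endpoint, `/services/collector/event`. The request carries an `Authorization: Splunk <token>` header. This sketch builds that request with the standard library only. `hec_event_request` and `send` are illustrative names. Disabling certificate checks mirrors the `insecureSSL: true` setting used in this lab and should not be carried into production.

```python
import json
import ssl
import urllib.request


def hec_event_request(host: str, port: int, token: str, event,
                      index: str = None, sourcetype: str = None) -> urllib.request.Request:
    """Build a POST to the HEC JSON event endpoint for a single event."""
    payload = {"event": event}
    if index:
        payload["index"] = index
    if sourcetype:
        payload["sourcetype"] = sourcetype
    req = urllib.request.Request(
        f"https://{host}:{port}/services/collector/event",
        data=json.dumps(payload).encode(),
        method="POST",
    )
    # HEC expects the token as "Splunk <token>", not "Bearer <token>".
    req.add_header("Authorization", f"Splunk {token}")
    req.add_header("Content-Type", "application/json")
    return req


def send(req: urllib.request.Request, insecure: bool = True) -> dict:
    """Send the request and return HEC's JSON reply."""
    ctx = ssl.create_default_context()
    if insecure:
        # Lab only: accept Splunk's self-signed HEC certificate.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(req, context=ctx, timeout=10) as resp:
        return json.loads(resp.read())
```

For example, `send(hec_event_request("bastion.local.bytewise.my", 8088, "<your token>", "hello from bastion", index="openshift"))` should return a small JSON acknowledgement on success. If the token is not permitted to write to the requested index, HEC rejects the event instead, which is worth finding out before the chart is installed.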

- Create a Helm values file, “splunk-hec.yaml”, that points the global settings and each component (logging, metrics, objects) at the HEC endpoint, token and index:

    #> cat splunk-hec.yaml
    global:
      logLevel: info
      journalLogPath: /run/log/journal
      splunk:
        hec:
          host: bastion.local.bytewise.my
          port: 8088
          token: xxxxxxxxxxxxxxxxxx
          protocol: https
          indexName: openshift
          insecureSSL: true
          #clientCert:
          #clientKey:
          #caFile:
      kubernetes:
        clusterName: "openshiftlab"
        openshift: true
    splunk-kubernetes-logging:
      enabled: true
      logLevel: debug
      splunk:
        hec:
          host: bastion.local.bytewise.my
          port: 8088
          token: xxxxxxxxxxxxxxxxxx
          protocol: https
          indexName: openshiftlab1_logging
          insecureSSL: true
          #clientCert:
          #clientKey:
          #caFile:
      containers:
        logFormatType: cri
      logs:
        kube-audit:
          from:
            file:
              path: /var/log/kube-apiserver/audit.log
    splunk-kubernetes-metrics:
      enabled: true
      splunk:
        hec:
          host: bastion.local.bytewise.my
          port: 8088
          token: xxxxxxxxxxxxxxxxxx
          protocol: https
          indexName: openshiftlab1_metrics
          insecureSSL: true
          #clientCert:
          #clientKey:
          #caFile:
      kubernetes:
        openshift: true
    splunk-kubernetes-objects:
      enabled: true
      kubernetes:
        openshift: true
      splunk:
        hec:
          host: bastion.local.bytewise.my
          port: 8088
          token: xxxxxxxxxxxxxxxxxx
          protocol: https
          insecureSSL: true
          indexName: openshiftlab1_objects
          #clientCert:
          #clientKey:
          #caFile:
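A common slip in this setup is an indexName in the values file that does not match any index created in Splunk, in which case HEC will typically reject that component's events. The sketch below reports every enabled component whose index is missing. It walks a values structure trimmed to the fields that matter and written as a Python dict, so it needs only the standard library; with PyYAML installed you could load splunk-hec.yaml with `yaml.safe_load` instead. Two details are assumptions: a subchart without an explicit `enabled` key is treated as enabled, and the function names are hypothetical.

```python
from typing import Dict, Iterator, Set, Tuple

# Index-related fields of splunk-hec.yaml, trimmed and written as a dict.
VALUES = {
    "global": {"splunk": {"hec": {"indexName": "openshift"}}},
    "splunk-kubernetes-logging": {"enabled": True,
                                  "splunk": {"hec": {"indexName": "openshiftlab1_logging"}}},
    "splunk-kubernetes-metrics": {"enabled": True,
                                  "splunk": {"hec": {"indexName": "openshiftlab1_metrics"}}},
    "splunk-kubernetes-objects": {"enabled": True,
                                  "splunk": {"hec": {"indexName": "openshiftlab1_objects"}}},
}


def hec_index_names(values: dict) -> Iterator[Tuple[str, str]]:
    """Yield (component, indexName) for `global` and every enabled subchart."""
    for component, section in values.items():
        if not isinstance(section, dict):
            continue
        # Assumption: a subchart without an explicit `enabled` key is enabled.
        if component != "global" and not section.get("enabled", True):
            continue
        index = section.get("splunk", {}).get("hec", {}).get("indexName")
        if index:
            yield component, index


def missing_indexes(values: dict, created: Set[str]) -> Dict[str, str]:
    """Return {component: indexName} for indexes that do not exist in Splunk."""
    return {comp: idx for comp, idx in hec_index_names(values) if idx not in created}
```

An empty dict means every enabled component points at an index that exists. A single-letter difference, such as "object" versus "objects", shows up as one entry naming the component to fix.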

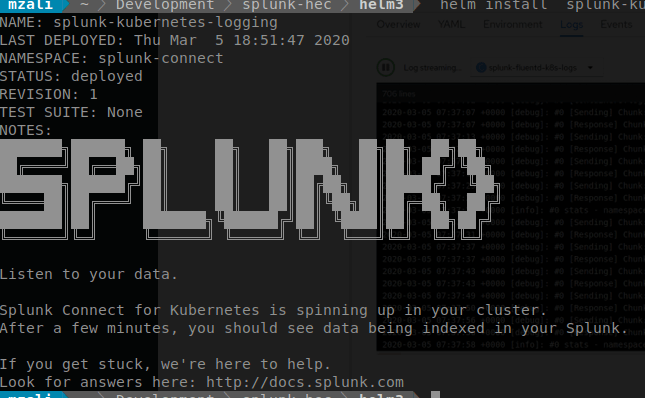

- We are now ready to deploy Splunk Connect for Kubernetes via the Helm 3 CLI:

    #> helm install splunk-kubernetes-logging -f splunk-hec.yaml https://github.com/splunk/splunk-connect-for-kubernetes/releases/download/1.4.0/splunk-connect-for-kubernetes-1.4.0.tgz
    #> oc get pods
    NAME                                                              READY   STATUS    RESTARTS   AGE
    splunk-kubernetes-logging-76l9k                                   1/1     Running   0          68s
    splunk-kubernetes-logging-gmjrm                                   1/1     Running   0          68s
    splunk-kubernetes-logging-sbtx7                                   1/1     Running   0          68s
    splunk-kubernetes-logging-splunk-kubernetes-metrics-2vtbm         1/1     Running   0          68s
    splunk-kubernetes-logging-splunk-kubernetes-metrics-6jjmh         1/1     Running   0          69s
    splunk-kubernetes-logging-splunk-kubernetes-metrics-agg-662qp7q   1/1     Running   0          69s
    splunk-kubernetes-logging-splunk-kubernetes-metrics-m6qwj         1/1     Running   0          69s
    splunk-kubernetes-logging-splunk-kubernetes-metrics-mdr65         1/1     Running   0          68s
    splunk-kubernetes-logging-splunk-kubernetes-metrics-pg2zr         1/1     Running   0          69s
    splunk-kubernetes-logging-splunk-kubernetes-objects-7b9864jw9wh   1/1     Running   0          69s
    splunk-kubernetes-logging-xgmh8                                   1/1     Running   0          68s
    splunk-kubernetes-logging-zvrzn                                   1/1     Running   0          69s
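Eyeballing a dozen pods is fine in a lab. For a repeatable check, the sketch below parses plain-text `oc get pods` output and returns every pod that is not Running with all containers ready. It assumes the default column layout shown above, with NAME, READY and STATUS as the first three columns. The function name is hypothetical. For anything beyond a quick check, `oc get pods -o json` is sturdier input than text columns.

```python
from typing import List


def unready_pods(oc_get_pods_output: str) -> List[str]:
    """Return names of pods that are not Running with every container ready."""
    rows = [line for line in oc_get_pods_output.splitlines() if line.strip()]
    not_ready = []
    for row in rows[1:]:  # rows[0] is the NAME/READY/STATUS/... header
        name, ready, status = row.split()[:3]
        ready_count, total = ready.split("/")
        if status != "Running" or ready_count != total:
            not_ready.append(name)
    return not_ready
```

Feed it the captured text of `oc get pods -n splunk-connect`; an empty list means every Splunk pod reported 1/1 Running at that moment.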

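IMPORTANT: Ensure every Splunk service account has the privileged SCC. The logging pods mount host paths such as /var/log, which the default restricted SCC does not allow. For example, for a release named “splunk-hec” in the “splunk-k8s” project (adjust the service account names and project to match your own release):

    #> oc adm policy add-scc-to-user privileged -z splunk-hec-splunk-kubernetes-logging -z splunk-hec-splunk-kubernetes-objects
    securitycontextconstraints.security.openshift.io/privileged added to: ["system:serviceaccount:splunk-k8s:splunk-hec-splunk-kubernetes-logging" "system:serviceaccount:splunk-k8s:splunk-hec-splunk-kubernetes-objects"]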

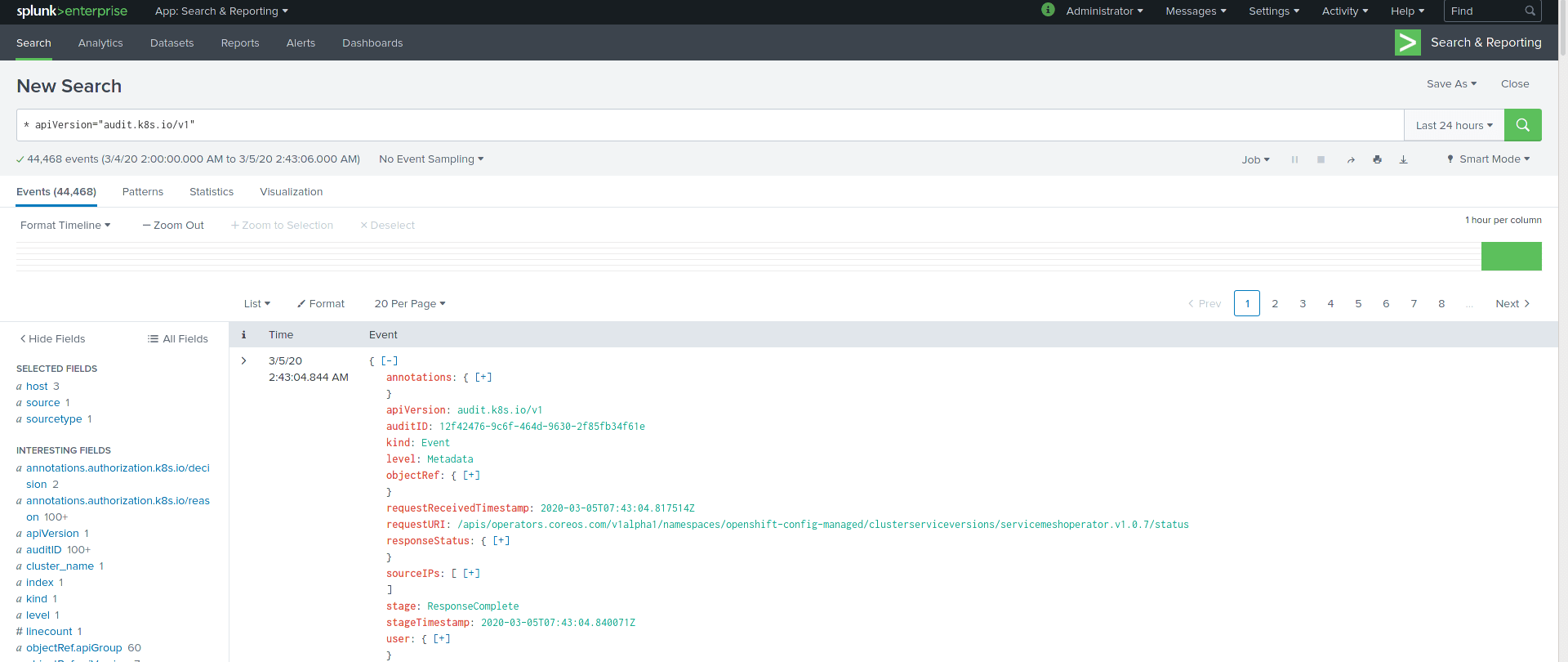

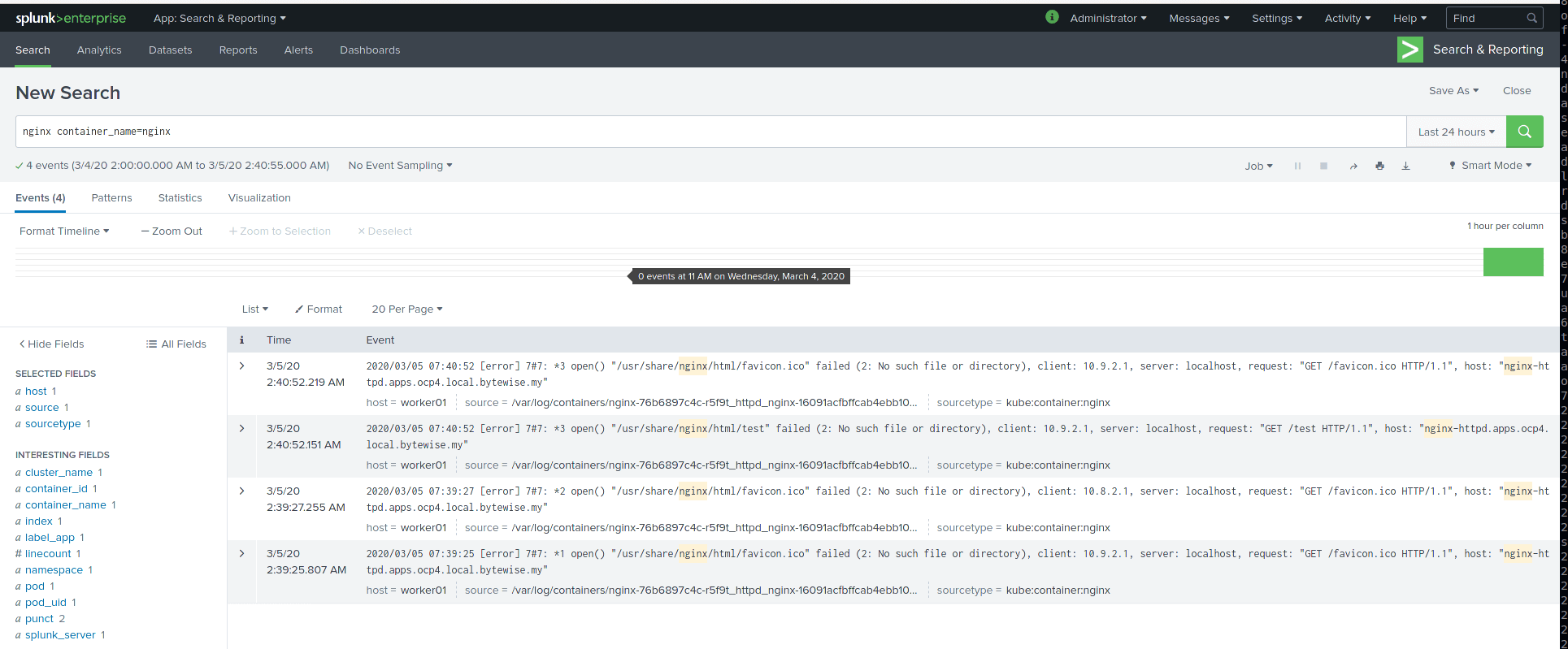

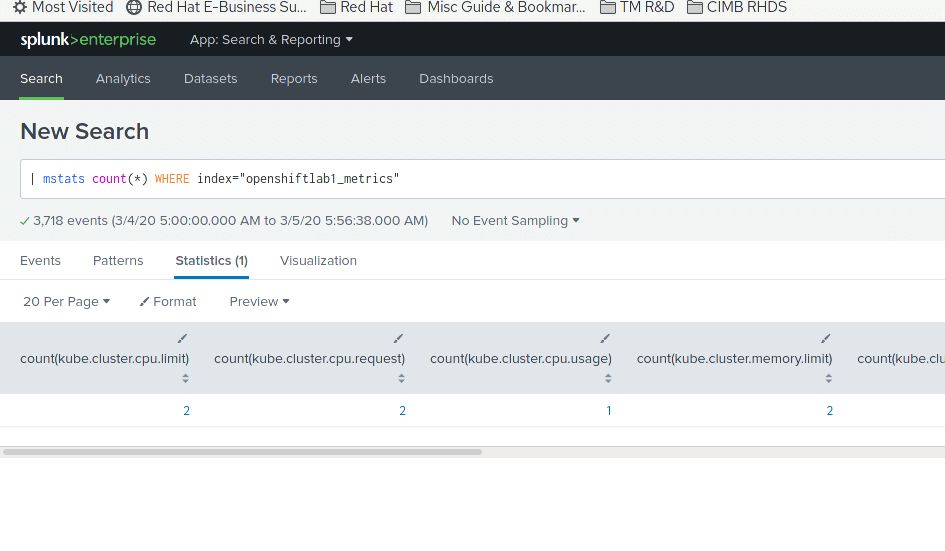

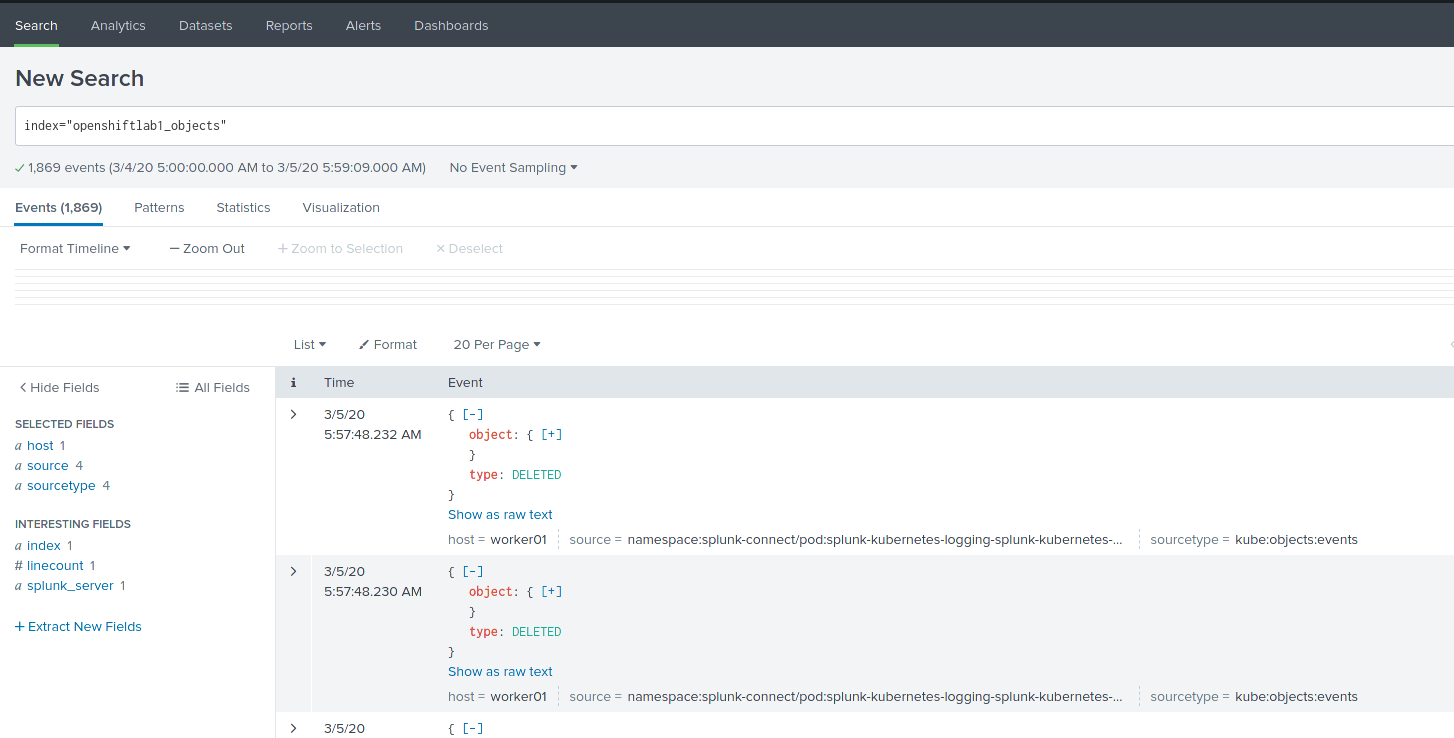

- Do index and metric search from UI and we can see data ingested and indexed properly on Splunk:

Summary

A centralized, aggregated SIEM is an essential part of an organization's infrastructure defense, and Splunk is one of the best-known SIEM solutions. We have just walked through how OpenShift 4 can use Splunk HEC to ship logs, metrics and selected Kubernetes objects off-cluster to Splunk.

Muhammad Aizuddin Zali

RHCA | AppDev & Platform Consultant | DevSecOps

Note

Disclaimer: The views expressed and the content shared in all published articles on this website are solely those of the respective authors, and they do not necessarily reflect the views of the author’s employer or the techbeatly platform. We strive to ensure the accuracy and validity of the content published on our website. However, we cannot guarantee the absolute correctness or completeness of the information provided. It is the responsibility of the readers and users of this website to verify the accuracy and appropriateness of any information or opinions expressed within the articles. If you come across any content that you believe to be incorrect or invalid, please contact us immediately so that we can address the issue promptly.